“The Evolutionary Psychology of Mass Mobilization: How Disinformation and Demagogues Coordinate Rather Than Manipulate

Michael Bang Petersen

Current Opinion in Psychology

Available online 20 February 2020

https://www.sciencedirect.com/science/article/pii/S2352250X20300208

Highlights

• Violent mobilization is often attributed to manipulation from, for example, demagogues.

• The human mind contains psychological defenses against manipulation, also in politics.

• Mass mobilization requires that the attention of group members is coordinated.

• Demagogues and disinformation can be explained as tools for achieving coordination.

• Mobilized individuals are predisposed for conflict rather than manipulated into conflict.

Large-scale mobilization is often accompanied by the emergence of demagogic leaders and the circulation of unverified rumors, especially if the mobilization happens in support of violent or disruptive projects. In those circumstances, researchers and commentators frequently explain the mobilization as a result of mass manipulation. Against this view, evolutionary psychologists have provided evidence that human psychology contains mechanisms for avoiding manipulation, and new studies suggest that political manipulation attempts are, in general, ineffective. Instead, we can understand decisions to follow demagogic leaders and circulate fringe rumors as attempts to solve a social problem inherent to mobilization processes: the coordination problem. Essentially, these decisions reflect attempts to align the attention of individuals already disposed for conflict.

(…)

In this review, I ask: What are the psychological processes underlying large-scale mobilization of individuals for conflict-oriented projects? The focus is on the specific psychological role fulfilled by (a) strong leaders, (b) propaganda and (c) fringe beliefs in the context of successful mobilization processes. Understanding this role is of essential importance in current political climates where we witness a combination of political conflict, the emergence of populist leaders and concerns about the circulation of “fake news” on social media platforms.

A frequently cited perspective is that large-scale mobilization for conflict-oriented projects reflects the use of propaganda by demagogues to manipulate the opinions of lay individuals by exploiting their reasoning deficiencies. Here, I review the emerging evidence for an alternative perspective, promoted especially within evolutionary psychology, which suggests that the primary function of leaders and information-circulation is to coordinate individuals already predisposed for conflict (1, 2**, 3). As reviewed below, human psychology contains sophisticated defenses against manipulation (4**) and, hence, it is extremely difficult to attain large-scale mobilization without the widespread existence of prior beliefs that such mobilization is beneficial. Furthermore, a range of counter-intuitive features of demagogues, disinformation and distorted beliefs is readily explained by a coordination perspective.

(…)

In general, leadership and followership evolved to solve coordination problems (21, 27) and there are reasons to expect that authoritarian leaders will solve these coordination problems to the benefit of those who seek aggression (19). Authoritarian leaders often have aggressive personalities themselves and, hence, are more likely to choose this focal point rather than others. Also, authoritarian leaders are more likely to aggressively enforce collective action, thereby also providing a solution to the free-rider problem. Consistent with this coordination-for-aggression perspective on preferences for dominant leaders, such leader preferences are specifically predicted by feelings of anger rather than, for example, fear (28, 29, 30), suggesting that people decide to follow dominant leaders to commit to an offensive strategy against the target group (28).

This perspective also explains highly counter-intuitive features of the appeal of demagogues. If followers search for the optimal leader to solve conflict-related problems of coordination, they will seek out candidates who are willing to violate normative expectations by engaging in obvious lying (31**) and who display a personality oriented towards conflict, even if such personalities under other circumstances would be considered unappealing (2**).

(…)

Another propaganda tactic is moralistic in nature. Thus, in less violent forms of group-based conflict, including in the context of modern social media discussions, an often-used tactic is to direct attention towards a group’s or person’s violation of moral principles. Moral principles are effective tools for large-scale coordination because they suggest that the target behavior is universally relevant (1, 34*, 35). Consistent with the coordination perspective, however, recent research suggests that the motivations to broadcast such violations can reflect attempts to mobilize others for self-interested causes. Thus, the airing of such moral principles, referred to as moral grandstanding, is strongly motivated by status-seeking (36*) and there is increasing evidence that the acceptance of moral principles shifts flexibly with changes in self-interest (37).

(…)

Consistent with this, recent evidence shows that political affiliation is a strong predictor of statements of belief in fringe stories such as conspiracy theories and “fake news” (3, 42**).

(…)

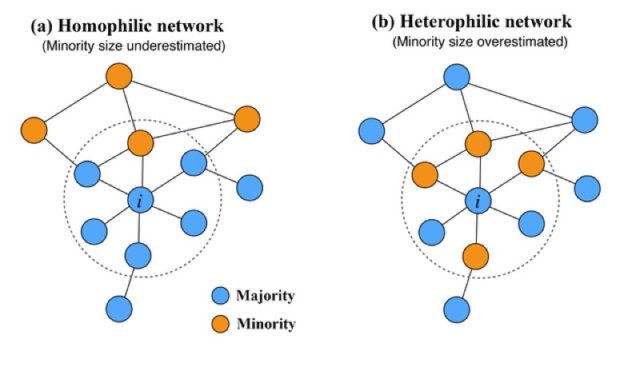

Overall, the effects of the coordination problem on mobilization processes are dual. On the one hand, the existence of the coordination problem means that groups and societies can be stable even if they contain large minority segments of individuals who share disruptive, violent or prejudiced views. On the other hand, the existence of the coordination problem also implies that this stability can be quickly undermined if coordination is suddenly achieved. This happens not because people are manipulated, but because a sufficient number of them direct attention to a particular set of preferences simultaneously.”